Online Translation Use in Spanish as a Foreign Language Essay Writing: Effects on Fluency, Complexity and Accuracy

El uso de traducción automática en la escritura en español como lengua extranjera: Efectos en fluidez, complejidad y corrección

RESUMEN

Este estudio examina la escritura de composiciones usando ordenadores en dos grupos (n=32+25, edades 17-18) de estudiantes suecos del español como lengua extranjera. Uno de los grupos tenía acceso libre a internet, el otro no. El artículo está enfocado en los efectos del uso de traducción automática (TA) sobre la fluidez de la escritura y sobre la complejidad y corrección gramaticales y lexicales. Algunos efectos pequeños pero estadísticamente significativos fueron encontrados. Hasta cierto punto, los efectos sobre la fluidez y la complejidad, pero no sobre la corrección, pueden ser explicados por el nivel de conocimiento del español de los estudiantes. Los estudiantes que usaron TA produjeron menos errores de ortografía y de concordancia entre artículos, sustantivos y adjetivos, pero más errores de sintaxis y de morfología verbal. Esto contradice la idea de los estudiantes de que la TA pudiera ayudarles a mejorar la sintaxis y a conjugar los verbos. Un mejor conocimiento del idioma es indicado como un prerrequisito para que la TA pueda ser competentemente usada. Se recomienda más investigación sobre efectos longitudinales sobre el aprendizaje de vocabulario y sobre la corrección gramatical.

Palabras clave: traducción automática, escritura en lenguas extranjeras, español como lengua extranjera, CAF

ABSTRACT

This study examines computer-based essay writing in Spanish as a foreign language among two groups of pupils in Sweden (n=32+25, aged 17-18), one group with Internet access, one without. The article focusses on effects of online translation (OT) on writing fluency, and on grammatical and lexical complexity and accuracy. Small but statistically significant effects were found. Effects on fluency and complexity, but not accuracy, can to some extent be explained by the pupils' proficiency level, rather than by the use of OT. Pupils using OT made fewer mistakes regarding orthography and article/noun/adjective agreement, but more mistakes regarding syntax and verb morphology. This contradicts the participants' belief that OT helped them improve syntax and verb inflection. Better language proficiency is pointed out as necessary to be able to make good use of OT. Further research on longitudinal effects of OT on pupils’ learning of vocabulary and grammatical accuracy is recommended.

Keywords: Online translation, Foreign language writing, Spanish as a foreign language, CAF

Date received: 10 September 2014

Date accepted: 7 December 2014

1. Introduction

Looking as a foreign language teacher at pupils’ writing abilities, one might sometimes, perhaps somewhat cynically, be inclined to share the views of Britton et al. (1975:39; quoted in Flower et al., 1981: 366), writing:

Teachers feeling a need to improve their pupils’ foreign language writing (FLW) skills are probably inclined to look for different ways to do so, and may be interested in digitalising their teaching – or feel pushed to do so (e.g. Tallvid et al., 2009; Fredriksson, 2011). In an earlier study on pupils’ attitudes towards computer-based grammar exercises (Fredholm, 2014), the participants showed varying degrees of reticence towards the computer-based exercises, questioning especially their instructive value, but were largely in favour of using computers for FLW (cf. Ayres, 2010). Taking this as a point of departure, the present study examines computer-based essay writing in Spanish as a foreign language in two groups of pupils in a Swedish upper secondary school, exploring specifically the use of online translation and its possible effects on fluency, complexity and accuracy.

According to Warschauer et al. (2010), writing may be an area where pupils benefit from using computers (provided that they are used in pedagogically sound ways, something that, of course, goes for all subjects). In Sweden, where the author of this article is working as a teacher of Italian, Spanish and Chinese, 250 out of the country’s 290 municipalities reported in 2013 that they had implemented or were about to implement 1:1 projects, providing pupils and teachers with individual laptops (Grönlund, 2014). This is, naturally, not unique to Sweden; after the first large-scale project in Maine (e.g. Silvernail et al., 2007), similar investments in digitalising schools have been seen in many places, such as Texas (e.g. Shapley et al., 2010), Uruguay, which started early (cf. Departamento de Monitoreo y Evaluación del Plan Ceibal, 2011), and many developing countries through the One Laptop per Child programme (‘One Laptop per Child’, 2014). The increase in computer access is often believed to facilitate school improvement, focussing generally on pupils’ academic results. Pro-computer voices also claim that an increase in technology use might motivate otherwise demotivated pupils – especially boys – to engage more in studies, at school as well as outside of school (Beastall, 2006; Buckingham, 2011). However, despite substantial investments, after at least two decades of school computerisation, few conclusive results can be seen as regards improved pupil performance, either internationally or in the local Swedish context (Cuban, 2001; Beastall, 2006; Buckingham, 2011; Cobo Romaní et al., 2011; Cristia et al., 2012; Scheuermann et al., 2012; Fleischer, 2013; Grönlund, 2014).

The role that grammar and grammatical accuracy play in foreign language teaching is, as ever, a much discussed topic, in a Swedish context and internationally (cf., e.g., Ciapuscio, 2002; López Rama et al., 2012). Muncie (2002) discusses the changing approaches to FLW that we have seen since the 1970s, stating that process and genre writing have led to a lesser focus on grammar, but stresses that “[g]rammar is just as important an instrument of communication as content, and a text cannot be written cohesively without attention being paid to how meaning is expressed through the grammar” (p. 183). Communicative competence has sometimes been regarded as something opposed to knowledge of grammatical forms, whereas supporters of the focus on form theory generally see no such discrepancy (cf. e.g. Ellis et al., 2002; Gaspar et al., 2003; Nassaji et al., 2004; Sánchez, 2008). There may also have been a tendency to somewhat overlook writing in favour of oral competencies (Cadierno, 1995; Burston, 2001a; Ciapuscio, 2002). In Sweden, the role of grammar in the teaching of modern languages is somewhat strengthened in the new curriculum of 2011 (Skolverket, 2013a) as compared to the previous one of 1994 (Skolverket, 2014a); however, the curriculum does not state in detail which grammatical features should be studied when, leaving it up to the teachers to deduce what grammar content is needed e.g. to master the production of text genres mentioned in the curriculum. These factors may influence the teaching of FLW and pupils’ capabilities to express themselves in writing.

Several researchers stress that pupils need a certain level of grammatical competence in order to be able to use technology such as online translation (OT) or grammar checkers (Vernon, 2000; cf., e.g. Potter et al., 2008). This is discussed in further detail in Fredholm (submitted). The use of machine translation or online translation in young pupils’ FLW has so far not been studied to any great extent, but can be assumed to be common and, probably, increasing as free services such as Google Translate develop and computers are more frequently used at schools (cf. e.g. Steding, 2009). A summary of previous research on machine translation can be found in Niño (2009), and, more comprehensively, in the recent doctoral thesis of O’Neill (2012), probably the major study in the area so far. As O’Neill points out, there have hitherto been quite few studies on the use and impact of online translation (OT), automated translation (AT) or machine translation (MT) in foreign language writing.1 Studying the impact of OT on students’ French essay writing, he concludes that OT does not necessarily lead to texts of inferior quality (considering aspects such as grammatical accuracy and general content of the essays), as many teachers might fear; whether it is a good method to learn to write in an FL, or to improve one’s knowledge of and proficiency in the studied language, is, however, another question altogether. According to O’Neill (2012), discussing Iwai’s research (1999), the use of computers and OT may enable pupils to focus more on content than on grammar or on retrieving words from memory, which might lead to a richer or more varied vocabulary usage. O’Neill’s findings are not conclusive in this area; it seems that OT does not negatively affect content, but that it cannot be said with certainty to improve it either (cf. O’Neill, 2012: 123).

Already in 1991, Hawisher et al. wrote that it is necessary to take into account not only the possibilities afforded by the use of technology when teaching writing, but the risks as well. This is a point of view repeatedly mentioned by many others, such as, recently, Hyland (2013), who talks about the pressure on teachers to use new technologies in their teaching, and about the need to critically examine the technology. If teachers are to make good use of the technologies available in the classroom, they need to be able to evaluate their pedagogical affordances. The present study was designed to address this issue.

1.1. The Swedish School System and Spanish as a Foreign Language

The Swedish school system comprises nine years of compulsory school (most often preceded by one or several years of pre-school) and three years of upper secondary school, the gymnasium, which is not compulsory but attended by the majority of pupils (Skolverket, 2014b). With the school reform of 2011, a new grading system was introduced, comprising six grade levels ranging from A to F, A being the highest. The lowest pass grade is E (Skolverket, 2013b).

English is the first foreign language taught at all schools, generally from the third year or earlier, and is compulsory. Every pupil has the opportunity to study at least one other foreign language. Traditionally, German and French have been the most common languages taught, but since Spanish was introduced as an option in 1994, its popularity has increased rapidly and it is now studied by about 50 % of the pupils, although drop-out rates, especially among boys, are quite high (Lodeiro et al., 2014; Francia et al., 2013). The number of pupils studying Spanish often leads to big and heterogeneous groups, and the demand for qualified teachers is still to be met. The first European survey of 15-year-olds’ proficiency in foreign languages (European Commission, 2012), albeit criticised (cf. Erickson et al., 2012), indicated low levels of Spanish knowledge among Swedish pupils, especially regarding writing skills.

2. Purpose of the Present Study

The main purpose of the present study is to examine how the use of OT affects fluency, complexity and accuracy in essay writing in Spanish as a foreign language. Comparisons are made with a control group also writing their essays on computers, but without access to the Internet.

The overarching research questions are, thus:

- Does the use of OT affect fluency, complexity and accuracy in essays written in Spanish as a foreign language by upper secondary school pupils?

- If so, how?

Other online resources such as Wikipedia searches and the use of verb conjugation sites did co-occur with OT use; as these uses were rare and do not seem to have made any discernible impact on the essays, they will not be dealt with in this article. The use of Microsoft Word’s grammar and spell checkers, which was very restricted and made little impact on the texts, will be mentioned briefly. Other questions regarding how and why pupils use different strategies to write in Spanish as a foreign language are discussed in Fredholm (submitted).

3. Methods and Participants

3.1. Essay Writing and Screen Recordings

Once a month from September to December of 2013, two groups (henceforth “the online group” and “the offline group”) of Swedish pupils studying Spanish as a foreign language2 were asked to write essays on topics related to the content of the national curriculum of modern languages (Skolverket, 2013a), as a part of their Spanish course. They wrote on their personal laptops provided by the school the previous year. The online group was allowed to access anything they wanted on the Internet, whereas the offline group was not allowed to use any online resources. The online group also had the Spanish spell and grammar checker functions of Microsoft Word installed on their computers, whereas the offline group did not. The 57 participating pupils handed in a total of 171 essays. As it became evident that many pupils in the offline group had accessed the Internet and used OT for a large part of their first essay, and for a smaller number of the remaining essays, the affected texts were discarded, leaving 112 essays (84 from the online group, 28 from the offline group), as is summarised below in Table I. The teachers had access to the essays for grading or to use them in other ways in their teaching and assessment.

Table I. Participants, essays and screencasts

The pupils, aged 17 – 18, were studying in the second year of the Swedish upper secondary school’s Social Sciences study programme. It was the first semester of their fifth or sixth year3 of Spanish studies, at the national curriculum level IV, corresponding roughly to the B1 level of the Common European Framework of Reference for Languages (Council of Europe, 2001). The gender distribution of the online group was fairly even, while the offline group only counted two male pupils. Grades from the previous year’s Spanish course were somewhat higher in the online group, although with pupils caught not following instructions in the offline group removed from the study, as mentioned above, grade levels were very evenly distributed between the groups, with 25 % high-achievers and 75 % low-achievers in the online group, 26 % high-achievers and 74 % low-achievers in the offline group.4 Most of the offline group pupils removed from the study had low grades, especially E and F.

The writing tasks were distributed and handed in electronically via a folder open to the teachers and to the researcher on the school’s learning management system (www.itslearning.com). The pupils had 30 minutes to write each essay, with an additional five minutes to read and understand the topic, to have a final look-through of their texts, to save them and hand them in. To reduce stress, the researcher made clear in the beginning of each writing session that there was no need to complete the essay.5

The pupils were asked to record their computer screens while writing, using the online screencast service screencast-o-matic.6 Due to technical problems, not every pupil was able to do this. A few screencast files were also damaged or not correctly saved. All in all, 26 pupils from the online group and 7 from the offline group handed in screencasts of 60 essay writing sessions, amounting to approximately 29 hours of screen films. Out of these, 42 recordings were analysed; the remainder were flawed or, in two cases, lacked the corresponding essays. The screencasts were handed in via the pupils’ Google drive accounts7 and watched by the researcher, with special focus on the screencasts from a focus group of pupils who were later interviewed. Most of the lessons were also observed by the researcher. The teacher of the online group was present on one occasion, the teacher of the offline group on all occasions.

3.2. Writing Tasks

As Puranik et al. (2008: 108) point out, “[t]here is no consensus in the literature regarding the best way to collect a written language sample”. The pupils were motivated to participate in the present study knowing that the tasks would be a way to prepare for the written part of the Swedish national test in Spanish, normally held in May. The four tasks, common to both groups, thus aimed to integrate text genres from the national curriculum of foreign languages (Skolverket, 2013a) and to reflect written prompts normally found in the national test. By using several different prompts, “the effects of individual prompts on the quality of writing” (Hinkel, 2002: 61) were reduced.

In summary, the tasks were as follows:

- Respond to a letter from a 19-year-old boy asking for advice on how to make his friends drink less alcohol and take interest in other activities.

- Retell the fairy-tale of Little Red Riding-Hood as if you were the heroine, 60 years later, talking to your grand-child.

- Write about traditions and customs typical of your country, and how they may have changed over time. Would you like any traditions to change or to disappear, and if so, why?

- Discuss your view of today’s school, explaining what you would like the school of tomorrow to be like.8

The tasks were designed to make the use of past tenses and the subjunctive mood possible (but not necessarily obligatory) in each topic. The pupils expressed that the first and the third topics were somewhat easier than the other two, all being, however, quite difficult. Quite a few pupils could not understand the prompts or important parts of them without referring to OT or a dictionary.

3.3. Surveys and Interviews

After the fourth essay writing session, all of the participating pupils were asked to fill out a short online survey regarding their attitudes towards and views on writing in Spanish and the use of ICT. Twenty pupils from the online group and 23 from the offline group answered the survey, giving a response rate of 75% (43 of the 57 participants).

Thirteen pupils (6 from the online group, 7 from the offline group) also volunteered to be interviewed in small groups. The recordings of the interviews were later (partly) transcribed for a qualitative content analysis. The results of the surveys and the interviews will be presented in a separate article focussing on the writing strategies used by the pupils (Fredholm, submitted).

4. Definitions and Adopted Measures of Fluency, Complexity and Accuracy

The 112 essays were thoroughly read up to ten times by the researcher and analysed for errors and inadequacies regarding morphology, syntax, lexicopragmatic features and orthography, as explained in further detail below. The data thus obtained were entered in SPSS and submitted to a t-test for inferential statistical analysis (with p<0.05) between groups A and B and between high- and low-achieving pupils (defined as explained in note 4). The accuracy of the statistical analysis was verified by an independent researcher.
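As an illustration of the kind of between-group comparison described above, the following minimal sketch computes a two-sample t statistic with the Python standard library. The article only states that a t-test was run in SPSS; the choice of Welch's unequal-variance variant, the function name `welch_t` and the toy values are assumptions made here for illustration, and the p-value (which would be read from the t distribution) is not computed.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for Welch's unequal-variance t-test.

    Assumption: the article does not say which t-test variant was used.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    # Sample variances (n - 1 in the denominator) divided by group size.
    se_a = variance(sample_a) / n_a
    se_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


# Invented toy values (e.g. a per-essay count), NOT data from the study.
online = [5, 4, 6, 5, 4]
offline = [4, 3, 4, 5, 3]
t, df = welch_t(online, offline)
print(f"t = {t:.3f}, df = {df:.1f}")
```

With real data, one value per essay (or per pupil) would be passed for each group, and the resulting t and degrees of freedom compared against the chosen significance level of 0.05.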

When there is a lack of consensus in previous research whether some of the adopted measures are to be considered as measures of complexity, accuracy or fluency, the recommendations of Wolfe-Quintero et al. (1998) and of Bulté et al. (2012) were followed.

4.1. Fluency Measures

There is a lack of consensus on what constitutes fluency (Wolfe-Quintero et al., 1998, chap. 1, 2; Abdel Latif, 2009; Gunnarsson, 2012). One way of looking at it is to measure the length of texts produced during a certain amount of time (Lennon, 1990; cf. Wolfe-Quintero et al., 1998: 13), which is the method adopted in the present study. A more detailed analysis, looking e.g. at the ratio and length of pauses and length of bursts of writing (cf. e.g. Gunnarsson, 2012), could not be done with the collected data.

Given that the pupils in the present study did not all use the same amount of time to write their essays (a few finished their texts earlier, and some started to write during the additional five minutes allocated for reading instructions), this measure is not entirely unproblematic, although the majority of pupils used the same amount of time (30 minutes). Considering also the fact that every pupil in the online group chose to automatically translate large parts of their texts, the measure might also be considered as one of technology management skills rather than of FLW fluency. (The offline group pupils who chose not to use any translation tools did, on the other hand, show more of their actual writing skills and fluency.) In any case, text length will be used as background information on the produced essays, and is the only fluency measure that can be used with the collected data.

4.2. Complexity Measures

A number of measures, outlined in Wolfe-Quintero et al. (1998) and Bulté et al. (2012), were chosen to shed light on the “[s]urface manifestations of grammatical [and lexical] complexity in L2 performance” (Bulté et al., 2012: 27-28).

4.2.1. Grammatical Complexity

The terms grammatical, morphological and syntactic complexity are to some extent interchangeable in the literature and are not equally subdivided by different researchers. In this study, “grammar” refers to morphology (cf. Gunnarsson, 2012), whereas “syntax” denotes clause and sentence structure, and word order. The subdivision of grammatical complexity into morphological and syntactic adopted by Bulté et al. (2012) is the one used also in this study.

Morphological complexity was measured as the number of different verb forms (tenses and moods) present in the essays. Syntactic complexity was first measured as the number of sentences in each essay and the mean number of words per sentence, a measure recommended by Szmrecsányi (2004; cf. Burston, 2001; Wolfe-Quintero et al., 1998). A sentence was considered as a graphic unit of words between two full stops, which in most of the cases coincided with what could normally be considered a pragmatically coherent utterance, although clause boundaries and clause types were often unclear. Headlines were counted as sentences only when invented by pupils, i.e. not copied from writing task instructions.
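The sentence-level measures just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: sentences are taken as the stretches of text between full stops, as in the definition above, while the whitespace-based word count, the function name `sentence_measures` and the sample text are choices made here, not details from the study.

```python
def sentence_measures(text):
    """Return (number_of_sentences, mean_words_per_sentence).

    A sentence is the graphic unit of words between two full stops;
    words are split on whitespace (an assumption of this sketch).
    """
    sentences = [s for s in text.split(".") if s.strip()]
    if not sentences:
        return 0, 0.0
    words_per_sentence = [len(s.split()) for s in sentences]
    return len(sentences), sum(words_per_sentence) / len(sentences)


# Invented sample text, not taken from any pupil essay.
sample = "Me gusta la escuela. Quiero aprender más idiomas en el futuro."
count, mean_length = sentence_measures(sample)
print(count, mean_length)
```

A full analysis would also need the manual decisions mentioned above, such as whether a headline was invented by the pupil and therefore counts as a sentence.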

An attempt was made to also use the ratio of dependent clauses to total number of clauses, used e.g. by Hirano (1991, ref. Wolfe-Quintero et al., 1998, chap. 4). Determining this ratio proved difficult, due to the sometimes unclear clause structures produced by the pupils. This led to the need to introduce a category of syntactically unclear clauses, used mainly for presumably dependent clauses without any subordinating connective element, or introduced by a clearly erroneous one, such as “No puedes cambiar tus amigos, es la primera cosa tienes que recordar” (You cannot change your friends, it is the first thing you have to remember, essay A17:1) where “tienes que recordar” (you have to remember) was considered as a relative clause lacking the connector “que” (that). The difficulty of determining clause types was also the reason for choosing to use sentences over T-units.9

4.2.2. Lexical Complexity

Lexical complexity (or lexical density and diversity; cf. Bulté et al., 2012: 28) was primarily measured using a type/token ratio (TTR) in a random sample of 48 words from each essay (the number of lemmas per 48 words). The reason for choosing 48 words was that this was the number of words in the shortest essay.

The TTR has been criticised for not taking into account how differences in text length affect the results (something to a certain degree avoided in the present study, though, as the same number of words was chosen). A version of Guiraud’s Index (Wolfe-Quintero et al., 1998: 107), dividing the number of word types (in the entire essays, ranging from 48 to 290 words) by the square root of two times the total number of words, was subsequently adopted to double-check the findings.
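The two lexical measures can be sketched as follows. This is a hedged illustration, not the procedure actually used: it assumes the essay has already been lemmatised into a list of strings, reads the random 48-word sample as a contiguous chunk starting at a random position, and reads "the square root of two times the total number of words" as the square root of (2 × tokens). The function names `sampled_ttr` and `root_index` and the toy lemma list are invented for the example.

```python
import random
from math import sqrt


def sampled_ttr(lemmas, sample_size=48, seed=0):
    """Type/token ratio in a random contiguous chunk of `sample_size` lemmas."""
    if len(lemmas) < sample_size:
        raise ValueError("essay is shorter than the sample size")
    rng = random.Random(seed)
    # Pick a random starting position so the chunk fits inside the essay.
    start = rng.randint(0, len(lemmas) - sample_size)
    chunk = lemmas[start:start + sample_size]
    return len(set(chunk)) / sample_size


def root_index(lemmas):
    """Word types divided by the square root of twice the number of tokens."""
    return len(set(lemmas)) / sqrt(2 * len(lemmas))


# Invented toy input (four tokens, three types), not data from the study.
toy = ["a", "b", "a", "c"]
print(sampled_ttr(toy, sample_size=4))
print(root_index(toy))
```

Fixing the sample size at 48 for every essay is what keeps the TTR comparable across texts of different length, whereas the root-based index is computed over the whole essay.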

4.3. Accuracy Measures

Researchers hold different views on what to count as an error (Wolfe-Quintero et al., 1998: 35-36), and the use of error-free measures has been criticised (e.g. Bardovi-Harlig et al., 1989). Several studies have instead analysed “how many errors occur in relation to production units such as words” (Wolfe-Quintero et al., 1998: 36). This is the method adopted in the present study, where the ratio of morphosyntactic, lexical and spelling errors per hundred words was established. As Wolfe-Quintero et al. (1998) and Gunnarsson (2012) point out, counting errors in beginners’ or intermediate learners’ productions is not always the best way to go, as errors will naturally be frequent and are part of the learning process; however, for the present study it was considered of interest to see not only how many errors were produced in each of the two groups, but also if there were any differences between the groups in frequency of different kinds of errors.

Morphosyntactic errors were judged as such following normative guidelines found in Spanish grammars (e.g. Alarcos Llorach et al., 1994; and, more common in the Swedish context, Fält, 2000); pragmatic errors such as context-inappropriate word choices were judged by the researcher and, when in doubt, a native Spanish speaking colleague. Any spelling resulting in a non-existing Spanish word was considered a misspelling (cf. Rimrott et al., 2005), apart from words clearly written in Swedish or English that were considered as wrong word choice.

Tables II and III (below) outline a subdivision of the different error categories used to measure accuracy in the essays. Unless otherwise stated, the ratio of errors per hundred words was counted. It may be argued that some error types might belong to more than one category, such as some of the syntactic error types that may well be seen also as pragmatic errors. The subdivision should be considered an attempt to categorise the different error types, not as an absolute subdivision of them.
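The errors-per-hundred-words ratio used throughout the accuracy analysis amounts to a simple normalisation by essay length, as the following minimal sketch shows. The function name, the category labels and the counts are hypothetical and serve only to illustrate the arithmetic.

```python
def errors_per_hundred_words(error_counts, total_words):
    """Map each error category to its rate per hundred words."""
    if total_words <= 0:
        raise ValueError("total_words must be positive")
    return {cat: 100 * n / total_words for cat, n in error_counts.items()}


# Invented counts for a hypothetical 120-word essay; not data from the study.
rates = errors_per_hundred_words({"spelling": 3, "verb morphology": 6}, 120)
print(rates)
```

Normalising in this way allows error frequencies to be compared between essays of different length, which matters here because the essays ranged from 48 to 290 words.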

4.3.1. Grammatical Accuracy

The essays were analysed for all kinds of morphological and syntactic errors that could be found in them. The range of error types is listed in Table II. Regarding syntax, the ratio of syntactically correct sentences to the total number of sentences was also used (cf. Wolfe-Quintero et al., 1998: 47).

Table II. Grammatical error categories

4.3.2. Lexicopragmatic Accuracy

This category examines both surface errors such as orthography, and errors concerning choice of words and a pragmatically adequate use of the words in the context, as specified in Table III.

Table III. Lexicopragmatic error categories

5. Results

5.1. Introduction

After a short comment on the pupils’ writing strategies, serving as a background to the results, differences between the two groups will first be discussed regarding writing fluency, and thereafter concerning accuracy and complexity.

5.1.1. Observed Essay Writing Strategies

A detailed discussion of the observed writing strategies will be published in Fredholm (submitted). Suffice it here to give a short overview of the strategies used by the pupils, in order to better understand their writing behaviours.

The use of printed dictionaries (Benson et al., 2000) was very restricted in the online group (one pupil used it on one occasion), but more common in the offline group, although several pupils preferred not to use it as they considered looking up words too time-consuming or too difficult. OT was used mostly to translate phrases, and somewhat less frequently to translate single words, as opposed to translation strategies observed by O’Neill (2012) and Clifford et al. (2013). A few pupils also translated entire paragraphs, one of them copying a short dialogue from an Internet site. OT was also used to translate Spanish text into Swedish to double-check its meaning. Many pupils trusted OT and the Word grammar checker to be able to correct the grammar in the essays, especially concerning verb forms, and depended frequently upon OT to build sentences, which they reported to be one of the most difficult things when writing in Spanish.

Wikipedia and Google were used to search for information, and to find out how to write ¿ and ñ. Online thesauri and the one in Microsoft Word were consulted to a small extent to find better translations of single words. One pupil used the Google picture search to look up pictures of translations she was not sure of.

The use of the grammar and spell checkers in Word increased during the study, and helped many pupils in the online group to ameliorate above all the spelling, although many did not check the marked errors. Many errors were automatically corrected by the program, without intervention from the pupils. The grammar checker was not much used and made little impact on the accuracy of the texts.

5.1.2. Number of Automatically Translated Words

In the online group essays for which screencasts were available, 44 % of the words were translated using OT (almost entirely Google Translate13 and the similar site Lexikon2414). The percentage of automatically translated words in a single essay ranged from 6 % to 100 %. Judging from classroom observations, it is plausible that OT use was similar among the pupils who did not manage to hand in screencasts. The number of machine-translated words seems not to be correlated with the grade levels (p=0.810). There were considerable individual differences among the pupils, although parsimonious OT users were generally found among the high-achievers.15

5.2. Results on Fluency of Writing

The essays varied in length from 50 to 290 words in the online group (mean length=135 words), from 48 to 270 words in the offline group (mean length=113 words), a non-significant difference (p=0.063). At first glance, the difference may look easily explained by the fact that pupils of the offline group took longer to look up words, especially as many of them proved to be uncertain of how to use a dictionary, and used more time to think through how to phrase a sentence (cf. Garcia et al., 2011). However, if the discarded offline group pupils who did not follow instructions and used OT are included in the analysis, the mean number of words in essays from the offline group decreases to 102 (p<0.001). This indicates that the use of OT by itself cannot explain differences in essay length. A majority of the discarded participants had low grades in Spanish from the previous year (grades E and, in a few cases, D), which might account for a lower level of writing fluency; two of the pupils, nevertheless, had the highest grade from the previous year, an A, and one pupil had a C. Overall, text length is significantly correlated with grade levels from the previous year (p<0.001), high-achievers writing longer texts, notwithstanding that there were important variations of text length within each grade level.

5.3. Results Regarding Complexity

5.3.1. Morphological Complexity

As mentioned earlier, the number of different verb forms present in the essays was used as a measure of grammatical complexity. On average, the online group essays contained 4.85 different verb forms, the offline group essays 3.89. The difference is statistically significant (p=0.011). The most frequent verb forms were the present tense indicative, the infinitive, the perfect indicative, the preterit, the imperfect indicative and the periphrastic future indicative. The gerund occurred only in the online group essays, as did the perfect subjunctive, the imperfect subjunctive and the conditional. All of these forms were very rare also in the online group essays and seem to be the effect of OT use rather than of the pupils’ proficiency level in Spanish. The forms are not always used correctly, especially not the conditional.

The only verb forms that were more common, on average, in the offline group essays were perfect participles (often incorrectly used without an auxiliary verb), non-existent verb forms (i.e. verb endings or verb stem forms that do not exist in the Spanish language), and the imperfect indicative. The first two cases can be explained by the fact that the offline group pupils received no help to conjugate verbs and sometimes forgot the auxiliary verb needed to form the perfect tense or simply mistook the verb ending or the way to handle the verb stem. It is more difficult to explain why they also used the imperfect tense more, but it is possible that the offline group had worked more recently in the classes with this tense and were thus more prone to using it.

5.3.2. Syntactic Complexity

The average number of sentences per essay was 11.90 in the online group essays and 10.57 in the offline group (p=0.173). The average sentence length was 11.70 words in the online group and 10.94 words in the offline group (p=0.288). Differences at sentence level were thus small and non-significant. It should also be remembered that the number of words per sentence does not automatically indicate sentence complexity, as many of the less proficient pupils wrote quite long sentences by simply coordinating independent clauses, something generally considered a sign of a low level of grammatical complexity in writers (cf. Wolfe-Quintero et al., 1998: 73).

When looking at the number of clauses and the distribution of dependent and independent clauses, there are significant differences between the groups, the online group producing more dependent and independent clauses, and more clauses per sentence, as specified in Table IV. As mentioned above, an important caveat is the highly unclear clause structure produced by many pupils, which made it quite difficult to determine what to count as what kind of clause. The category of syntactically unclear clauses, used when the nature of a clause could not be unequivocally established, was slightly more frequent in the online group (mean number 1.54 per essay) than in the offline group (1.04), but the difference in mean numbers between the groups is not significant (p=0.137). There were quite big differences, albeit non-significant (p=0.071), between essays regarding the total number of syntactically unclear clauses, ranging from none to ten such clauses per essay.

Table IV. Clause distribution in the online group and the offline group essays

5.3.3. Lexical Complexity

In the present study, no immediate differences in the ways the pupils approached the essay topics can be seen between the groups (nor, to any greater extent, within the groups), most of them writing more or less the same things and presenting similar ideas, with no clearly discernible differences in vocabulary.16 The essays’ lexical complexity, variation or diversity (for a discussion on terminology in this field, see e.g. Yu, 2009, and Malvern et al., 2004, chap. 1) was first analysed using a type/token ratio where the unique lemmatised words in a randomly chosen text chunk of 48 words were counted (forty-eight words being the length of the shortest essay), a method previously used by e.g. Arnaud (1992).17 This was done for all essays, and the average number of unique words per 48 words was then calculated for each group. The result was exactly the same in both groups: 34.04 unique words (or 71% of all words). The fact that both groups ended up with the exact same mean number of unique words is rather unexpected, considering the fact that the online group had immediate access to OT.
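The chunk-based count described above can be illustrated with a minimal Python sketch. It is not the procedure actually used in the study: it assumes that the "text chunk" is a contiguous run of 48 tokens, and the helper `simple_tokens` is a hypothetical stand-in that merely lowercases word forms instead of performing real lemmatisation. All function names are illustrative.

```python
import random
import re


def simple_tokens(text):
    """Hypothetical stand-in for lemmatisation: lowercased word forms only."""
    # [^\W\d_]+ matches runs of letters, including accented Spanish letters.
    return re.findall(r"[^\W\d_]+", text.lower())


def chunk_unique_words(tokens, chunk_size=48, rng=None):
    """Count the unique tokens in one randomly placed contiguous chunk."""
    if len(tokens) < chunk_size:
        raise ValueError("essay is shorter than the chunk size")
    if rng is None:
        rng = random.Random()
    # Pick a random start so that the whole chunk fits inside the essay.
    start = rng.randrange(len(tokens) - chunk_size + 1)
    chunk = tokens[start:start + chunk_size]
    return len(set(chunk))


def group_mean_unique(essays, chunk_size=48, rng=None):
    """Average the per-essay unique-word counts over a group of essays."""
    counts = [chunk_unique_words(simple_tokens(e), chunk_size, rng) for e in essays]
    return sum(counts) / len(counts)
```

Under this reading, the reported group mean of 34.04 unique words per 48-word chunk corresponds to 34.04 / 48 ≈ 0.709, i.e. the 71% given above. Fixing the chunk size at the length of the shortest essay keeps the measure comparable across essays of very different lengths.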

Now, there are many ways to count lexical variation18, none of them perfect, especially not when it comes to evaluating lexical variation in short texts or texts of highly differing lengths, such as the essays analysed in the present study. An adaptation of Guiraud’s Index (Wolfe-Quintero et al., 1998: 107), dividing the number of word types (in the entire essays) by the square root of two times the total number of words, was also used, and rendered a small difference between the groups, the online group (A) receiving a lexical variation value of 4.0807, and the offline group (B) a value of 3.8719. This seems to indicate that OT might enhance the range of vocabulary in written texts (which does not necessarily mean, though, that pupils actually learn more words). The numbers above are, however, difficult to interpret and seem in any case to show very small differences between the groups. The small differences in lexical variation that exist within each group, and more specifically among the OT users in the online group (where, on average, high-achievers produced 35.11 different words, low-achievers 33.69), are more clearly correlated with the pupils’ grade levels (p=0.022) than with the amount of OT used, where no significant correlation can be seen (p=0.271).
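The adapted index described above (word types divided by the square root of twice the number of tokens) can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name `adapted_guiraud` is hypothetical, the tokens are assumed to be already lemmatised, and the text does not specify whether the group values were obtained by averaging per-essay indices or in some other way.

```python
import math


def adapted_guiraud(tokens):
    """Word types divided by the square root of two times the token count."""
    if not tokens:
        raise ValueError("empty essay")
    types = len(set(tokens))  # number of distinct (assumed lemmatised) words
    return types / math.sqrt(2 * len(tokens))
```

For example, an essay of 8 tokens containing 4 distinct words yields 4 / sqrt(16) = 1.0. Because the denominator grows with the square root of text length rather than with text length itself, the index penalises longer essays less heavily than a plain type/token ratio does.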

5.4. Results on Accuracy

Regarding accuracy, an initial hypothesis was that the online group would commit more grammatical and syntactic errors, whereas the offline group, having to cope without spelling and grammar checker assistance, would make more errors in terms of orthography (cf. O’Neill, 2012) and simpler grammatical errors such as article-noun-adjective agreement errors etc.

In the following paragraphs, features concerning accuracy in the essays will be presented and discussed, with special focus on significant differences between the groups. The reader should of course remember that, as Vernon (2000: 347) eloquently puts it, “correct writing is not necessarily good writing”; an analysis of employed ideas and other text qualities must however be left to another article.

5.4.1. Morphological Accuracy

Morphological errors constituted 57 % of all errors in the online group essays and 65 % in the essays of the offline group. Significant or near-significant differences in morphological accuracy were found only concerning verb mood (p=0.007), where the online group made more mistakes19, and noun/adjective and noun/article agreement (p=0.052 and p<0.001 respectively), where the offline group made more mistakes.

Regarding verb mood, this can be compared to the fact that the online group used more verb forms and were thus at a greater risk of using them incorrectly. It does indicate, however, that neither the OT nor the grammar checker of Word was always able to analyse sentence context and supply the correct verb mood. It should also be noted that the offline group pupils hardly used any subjunctives, and very few conditionals, most likely because they had not learnt those forms yet; furthermore, they seldom produced clauses that would require the use of the subjunctive mood. It may be argued that OT can help pupils to gain insight into more difficult Spanish clause and sentence structures (something that several pupils commented on in the interviews); however, as the OT made several mistakes regarding the appropriate use of mood, the pedagogic value is questionable.

Table V sums up the most frequent morphological errors, with examples from some of the essays. Only one error type (underlined) is marked in each example.

Table V. Most frequent morphological errors

5.4.2. Syntactic Accuracy

The ratio of syntactically correct sentences to the total number of sentences was measured as an indicator of the pupils’ ability to produce syntactically accurate sentences.20 On average, the online group produced 3.18 correct sentences per essay, the offline group 2.18, rendering a ratio of 25.87% correct sentences to the total number of sentences in the online group essays and 16.93% in the offline group, a significant difference (p=0.013). A majority of the correct sentences are, however, quite short and syntactically simple, such as “¡Hola Pablo” (‘Hi Pablo!’) or “¡Buena Suerte, Adiós!” (‘Good Luck, Goodbye!’), both from essay A8:1. Furthermore, several of the syntactically correct sentences contained other errors, such as misspellings or, more commonly, inadequate word choices such as “pleno verano” (‘full summer’) instead of “San Juan” (‘midsummer’), or “tren” (‘train’) instead of “desfile” or “cortejo” (‘procession’).

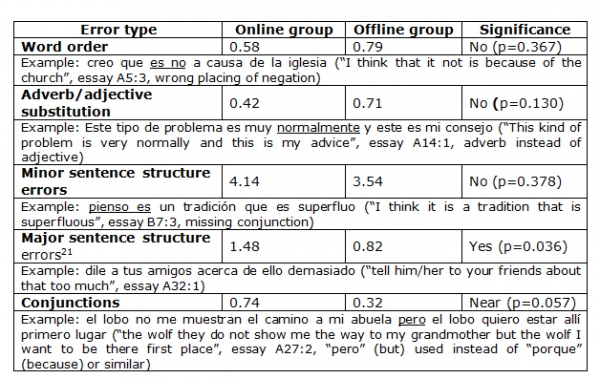

Both groups struggled with forming syntactically correct (or sometimes, indeed, comprehensible) phrases or sentences, many pupils commenting that they did not know how to construct a sentence in Spanish, and that they often relied on OT to get a hint on acceptable sentence structure. This is reflected in the number of syntactic errors, which made up 27 % of all errors in the texts from the online group and 25 % in the offline group (a non-significant difference, p=0.444). The most common error type was minor sentence structure errors, such as the wrong use of grammatical particles or connectors (especially de and que), the omission of these kinds of words or the superfluous addition of them; 17 % of all errors in the online group and 15 % in the offline group belonged to this category. Other errors concerned word order (2 % in the online group, 3 % in the offline group, p=0.367), the substitution of adverbs with adjectives or vice versa (2 % in the online group, 3 % in the offline group, p=0.130) and major sentence structure errors, i.e. errors on clause and sentence level leading to incomprehensible or nearly incomprehensible text chunks (6 % in the online group, 4 % in the offline group, p=0.036). Differences in the correct use of conjunctions approach significance (p=0.057), with more mistakes in the online group. The category of major sentence structure errors, which needed to be introduced during the analysis, was found to be common, and the difference between the groups is significant, the online group (A) making more of these mistakes (p=0.036). A summary of the syntactic error types with examples is shown in Table VI.

Table VI. Summary and examples of syntactical error types

The use of OT does not, thus, seem to lead to any greater differences as far as simpler errors are concerned; the only clearly significant difference can be seen with major sentence structure errors, which made the texts difficult to understand. This might be explained in the present study by the fact that many pupils in the offline group chose not to try to write more complex sentences, thus avoiding many of the pitfalls encountered in the online group, where complex sentences were translated automatically, without many of the pupils having sufficient proficiency in Spanish to be certain of the correctness of the translations, or to be able to improve them. Another explanation might be, however, that without being able to resort to automated help, pupils from the offline group had to plan their writing and think their sentences through better. Both explanations seem to be true, among different pupils, judging from the questionnaire and the interviews.

5.4.3. Lexical and Pragmatic Accuracy

This category concerns the correct and coherent choice of words, correct spelling and the consistent use of forms of address; inconsistent forms of address were an error frequently found in some of the essays.

5.4.3.1. Choice of Words and Pragmatics

Both groups made errors concerning context-appropriate choice of words, an error type that constituted 11 % of all errors in the online group essays and 10 % in the offline group. There was a mean of 2.62 contextually wrong word uses in the online group essays and slightly fewer, 2.36, in the offline group. The difference is not significant (p=0.635). Counted as a ratio of wrong words to the total number of words, 1.93% of the words in the online group essays were incorrectly used, compared with 2.08% of the words in the offline group essays (p=0.403).

Many of the word choice errors are due to context-inappropriate OTs or to choosing the wrong option in the dictionary, especially when a Swedish word can be translated into two or more Spanish words, such as “mandar” (to send) instead of “transmitir” (to broadcast), both “sända” in Swedish. Pupils in the online group did show awareness of this problem and resorted in quite a few cases to double-checking translations and suggestions for alternative translations, although few were capable of choosing the most appropriate alternative.

In the first essay, written as a letter to a 19-year-old boy, a large number of the online group pupils were inconsistent in their forms of address, changing back and forth between informal 2nd and formal 3rd person (tú and usted). This confusing pronoun and verb form use was almost never seen in subsequent essays, where pupils did not necessarily have to address the reader directly. It was present to a minor degree in the offline group essays, but as all of their first essays had to be discarded, comparisons cannot be made between the groups. It seems plausible, though, that the number of inconsistencies depended on the use of technology, as the screencasts revealed that the OTs many times translated the Swedish du (“you”, 2nd person, informal) to Spanish usted (3rd person, formal), although not always. The pupils did not seem to react to this.22

5.4.3.2. Orthographic Accuracy

The most obvious difference between the groups is the number of misspellings. The pupils of the online group made a mean of 1.20 spelling errors per essay, whereas the pupils of the offline group on average made 7.75 spelling errors per essay (or 0.99 % of the words misspelled in the online group, 7.41 % in the offline group; p<0.001). The result was expected for several reasons (cf. Heift et al., 2007; Figueredo et al., 2006; Steding, 2009). Firstly, the offline group had no access to a spell checker. The auto-correction function of Word used by the online group also contributed to reducing the number of misplaced or omitted accent marks and other spelling errors. Secondly, as many of the pupils of the offline group, according to their survey answers and the interviews, felt that they had too little time to write, and considered looking up words in a dictionary too time-consuming, they more than once chose to rely on their own knowledge or intuition rather than to look up semi-familiar words an extra time in the dictionary. Thirdly, this perceived time constraint may of course also have contributed to a less careful handling of the keyboard, increasing the number of lapses.

6. Discussion

The present study suggests that some significant differences may be discerned regarding different aspects of fluency, complexity and accuracy between essays written with or without OT. The technology seems to be capable of compensating for errors of a simpler order, such as article-noun-adjective agreement, but less capable of correcting more complex errors, such as faltering syntax, lack of cohesive devices or the choice of verb moods and aspects. These errors are often among the ones that most impede comprehension of the texts, which makes them all the more problematic, and all the more important to pay explicit attention to when teaching pupils to write in Spanish as a foreign language.

What becomes clear, looking at the results, is that the use of OT in itself does not seem to bring about dramatic changes in texts, regarding fluency, complexity or accuracy, neither improvements nor deteriorations. This is in line with O’Neill’s (2012) findings. The texts from the online group did become slightly longer, with a slightly higher number of sentences and independent clauses, and the number of misspelled words was significantly reduced (thanks both to OT and to the spell checker of Word); apart from the number of misspellings, however, the differences between the groups were generally small. It is apparent that many differences were less related to technology use than to the pupils’ previous proficiency level of Spanish, and, to some extent, to the pupils’ ability to effectively handle the technology and avoid its pitfalls. As Hyland (2013) points out, “[h]elp in using the thesaurus, and spelling and grammar checkers is also essential to avoid an overreliance on these very fallible features and their ad hoc, de-contextualized advice” (p. 148).

The pupils who managed to use technology competently were high-achievers who, it might be assumed, would have been able to write well anyway. Now, it may be said that OT and tools such as the spell and grammar checkers can be used to improve low-achievers’ FLW, as they help them to communicate more (i.e. to write longer texts) and marginally better (as far as CAF is concerned; style and idea generation, as mentioned above, are other concerns not addressed here), according to Garcia et al. (2011); however, the authors also state that OT use may not lead to increased learning and might make pupils more “lazy” (p. 486). It remains unclear, thus, whether technology use in the long run helps pupils, especially low-achievers, to become better writers or in any way better Spanish speakers (cf. Larson-Guenette, 2013). The results of the present study show no clear indication of any progress during the four months it lasted, but a longer longitudinal study involving pre- and post-tests on e.g. vocabulary knowledge and retention, and on grammatical accuracy, could perhaps further elucidate whether OT results in any lasting effects on the pupils’ knowledge of the language. As many pupils stated that they thought that OT was a good tool to provide them with much-needed assistance with Spanish syntax, this might be an especially interesting field to study further. It clearly also remains an area to be better addressed by Spanish teachers in Swedish schools; although many of the texts produced by the pupils in the present study might, at least partially, be considered “good enough” for a native Spanish speaker to understand them (cf. Aiken et al., 2011), the results can often be said not to reflect the goals presented in the curriculum. Likewise, the spell checker, properly used (cf. Heift et al., 2007), may contribute to pupils’ learning of foreign language orthography; the longitudinal effects do, however, need to be further studied (cf. Leacock et al., 2010).

No matter what views teachers and researchers hold on OT, it is probably naïve to try to forbid its use. Language teachers working in digitalised school environments will have to learn the affordances and the flaws of the technology, in order to be able to teach their pupils how to use it in ways that sustain and, possibly, improve language learning (cf. Niño, 2009; O’Neill, 2012; Larson-Guenette, 2013); or, as Steding (2009: 179) eloquently puts it: “What students usually do not understand is that it is not the educational goal of writing assignments in a language class to simply translate from L1 to L2.” Further studies can hopefully shed more light on the potentials and limitations of OT in foreign language learning.

Finally, one cannot help but think that a neo-Luddite (cf. Roszak, 1994) – if there are any left – would probably say that in order to improve their Spanish writing, pupils need first and foremost not to enhance their technology use, but in fact to improve their knowledge of the Spanish language. Whether this can be done through a better use of OT and other digitalised writing resources is still an open research field, and an important one as language teachers and learners face the increasing school digitalisation.

1 The present study follows O’Neill’s (2012) use of terminology. Machine translation (MT) and automated translation (AT) are understood as all sorts of automated translation technology; this includes online translation, but also encompasses software not available online, such as digitalised versions of traditional dictionaries, special handheld translation devices etc., whereas online translation (OT) only comprises translation services found on the internet (often for free), such as Google Translate, Babelfish and others.

2 For most of the participants, Spanish was their third language after Swedish and English. A few of them had a mother tongue other than Swedish (they did not, however, hand in any essays apart from a single one in the offline group), and a few pupils stated they had two mother tongues, Swedish nevertheless being the stronger one.

3 Swedish lower secondary schools can choose whether the pupils start studying a third language in year 6 or 7.

4 As mentioned earlier, the Swedish grading system introduced in 2011 (Skolverket, 2013b) consists of a scale from A to F, E being the lowest pass grade. In the present study, pupils with the grades E, D and C were considered low-achievers, those with a B or an A as high-achievers. It could be argued that C is quite a high grade in the Swedish system, but given the overall weak results of the participating pupils, the researcher chose to consider them as low-achievers.

5 To some pupils, a time-on-task of 30 minutes was nevertheless considered too short. These pupils were very few, though. The writing time was chosen to make the analysis of the material possible for the researcher and to reduce the amount of time taken from the pupils’ ordinary lessons. A writing time of 30 minutes can be considered within a normal range, considering Silva’s meta-analysis of 72 writing studies (Silva, 1993). For a brief discussion on how much time to allow for an essay, see Hinkel (2002: 62–63).

6 See www.screencast-o-matic.com.

7 The use of Google Drive was necessary as it turned out that the school’s learning management system could not handle large files.

8 These are summarised translations of the prompts given in Spanish.

9 T-units have furthermore been criticised for being developed for research on children’s writing; Bardovi-Harlig (1992) suggested that sentences be used as a unit in analyses of adolescent and adult writers’ texts (cf. Polio, 2008).

10 This category included the verbs ser, estar, haber and tener.

11 This was considered a syntactic rather than a morphological error, as the use of adverbs or adjectives depends on the syntactic context of the sentence.

12 This and the following category were considered part of the pragmatic errors, as the produced sentences were syntactically and morphologically correct but showed an inconsistent use of forms of address, as will be commented on further below.

13 See https://translate.google.se/ for the Swedish version of the site, most commonly used by the participants.

14 See http://www.lexikon24.nu/.

15 There is one outlier among the high-achievers, who frequently used OT. If he is removed, the correlation between OT use and grade levels approaches significance, with p=0.073.

16 Complexity of ideas and of structuring of ideas (including coherence and textual cohesion) is beyond the scope of this article.

17 Wolfe-Quintero et al. (1998) advise against the use of TTR, “unless there is either a time limit or a conceptual limit on production” (p. 103). These criteria were met in the present study.

18 For an extensive list of used measures, see Wolfe-Quintero et al. (1998, chap. 5).

19 This could be considered partly a syntactic error as well, considering the fact that the use of verb mood is highly dependent on the syntactic context of the sentence.

20 As explained above, sentences were chosen over the more commonly used T-unit as the many missing connective elements made it difficult to discern what was intended as for example an independent or dependent clause. Sentences considered as syntactically correct often contained morphological and lexicopragmatic errors, as well as misspelled words.

21 Many of these phrases also contain pragmatic errors as well as several grammatical errors. All phrases that could not be unequivocally corrected or understood were placed in this category.

22 The fact that the online group had a native Latin-American teacher, frequently using ustedes (3rd person plural) rather than vosotros (2nd person plural, used in Spain) to address the class, might have made the pupils more familiar with 3rd person forms of address, thus not reacting to them as much when they saw them in the text.

Abdel Latif, Muhammad M. (2009), Toward a New Process-Based Indicator for Measuring Writing Fluency: Evidence from L2 Writers’ Think-Aloud Protocols. Canadian Modern Language Review/ La Revue canadienne des langues vivantes, 65(4): 531-558.

Aiken, Milam and Balan, Shilpa (2011), An Analysis of Google Translate Accuracy. Translation Journal. Available at http://translationjournal.net/journal/56google.htm [2 December 2013].

Alarcos Llorach, Emilio and Real academia española (1994), Gramática de la lengua española. Madrid: Espasa.

Arnaud, P.J.L. (1992), Objective Lexical and Grammatical Characteristics of L2 Written Compositions and the Validity of Separate-Component Tests, 133-145, in: Arnaud, P. J. L. and Bejoint, H. (Eds.), Vocabulary and applied linguistics. London: Macmillan.

Ayres, Robert (2010), Learner Attitudes towards the Use of CALL. Computer Assisted Language Learning, 15(3): 241-249.

Bardovi-Harlig, Kathleen (1992), A Second Look at T-Unit Analysis: Reconsidering the Sentence. TESOL Quarterly, 26(2): 390-395.

Bardovi-Harlig, Kathleen and Bofman, Theodora (1989), Attainment of Syntactic and Morphological Accuracy by Advanced Language Learners. Studies in Second Language Acquisition, 11(1): 17-34.

Beastall, Liz (2006), Enchanting a Disenchanted Child: Revolutionising the Means of Education Using Information and Communication Technology and E-Learning. British Journal of Sociology of Education, 27(1): 97-110.

Benson, Ken, Strandvik, Ingemar and Santos Melero, María Esperanza (Eds.) (2000), Norstedts spanska ordbok. Stockholm: Norstedts.

Britton, James et al. (1975), The Development of Writing Abilities. London: Macmillan.

Buckingham, David (2011), Beyond Technology: Children’s Learning in the Age of Digital Culture. Cambridge: Polity Press.

Bulté, Bram and Housen, Alex (2012), Defining and Operationalising L2 Complexity, 21-46, in: Housen, A., Kuiken, F., and Vedder, I. (Eds.), Dimensions of L2 Performance and Proficiency. Language Learning & Language Teaching. Amsterdam / Philadelphia: John Benjamins Publishing Company.

Burston, Jack (2001a), Exploiting the Potential of a Computer-Based Grammar Checker in Conjunction with Self-Monitoring Strategies with Advanced Level Students of French. CALICO Journal, 18(3): 499-515.

Burston, Jack (2001b), Computer-Mediated Feedback in Composition Correction. CALICO Journal, 19(1): 37-50.

Cadierno, Teresa (1995), El aprendizaje y la enseñanza de la gramática en el español como segunda lengua. Reale, (4): 67-85.

Ciapuscio, Guiomar E. (2002), El lugar de la gramática en la producción de textos, in: Simposio Internacional ‘Lectura y escritura: nuevos desafíos’. Mendoza, Argentina. Available at http://www.uruguayeduca.edu.uy/UserFiles/P0001/File/el_lugar_de_la_gramatica_en_la_produccion_de_textos.pdf [15 August 2013].

Clifford, Joan, Merschel, Lisa and Munné, Joan (2013), Tanteando El Terreno: ¿Cuál Es El Papel de La Traducción Automática En El Aprendizaje de Idiomas? @tic. revista d’innovació educativa, 0(10). Available at http://ojs/index.php/attic/article/view/2228 [26 March 2014].

Cobo Romaní, Cristóbal and Moravec, John W. (2011), Aprendizaje Invisible. Hacia una nueva ecología de la educación. Barcelona: Col·lecció Transmedia XXI. Laboratori de Mitjans Interactius / Publicacions i Edicions de la Universitat de Barcelona.

Council of Europe (2001), The Common European Framework for Language Learning. Available at http://www.coe.int/t/dg4/education/elp/elp-reg/Source/Key_reference/CEFR_EN.pdf [16 April 2013].

Cristia, Julián P., Ibarrarán, Pablo, Cueto, Santiago, Santiago, Ana and Severín, Eugenio (2012), Tecnología y desarrollo en la niñez: Evidencia del programa Una Laptop por Niño. Banco Interamericano de Desarrollo. Available at http://dide.minedu.gob.pe/xmlui/handle/123456789/676 [24 July 2014].

Cuban, Larry (2001), Oversold and Underused: Computers in the Classroom. Cambridge, MA: Harvard University Press.

Departamento de Monitoreo y Evaluación del Plan Ceibal (2011), Segundo informe nacional de monitoreo y evaluación del Plan Ceibal 2010. Departamento de Monitoreo y Evaluación del Plan Ceibal.

Ellis, Rod, Basturkmen, Helen and Loewen, Shawn (2002), Doing Focus-on-Form. System, 30(4): 419-432.

Erickson, Gudrun and Lodeiro, Julieta (2012), Bedömning av språklig kompetens - En studie av samstämmigheten mellan Internationella språkstudien 2011 och svenska styrdokument. Stockholm: Skolverket.

European Commission (2012), First European Survey on Language Competences: Final Report. Available at http://ec.europa.eu/languages/eslc/docs/en/final-report-escl_en.pdf [17 December 2013].

Fält, Gunnar (2000), Spansk grammatik för universitet och högskolor. Lund: Studentlitteratur.

Figueredo, Lauren and Varnhagen, Connie K. (2006), Spelling and Grammar Checkers: Are They Intrusive? British Journal of Educational Technology, 37(5): 721-732.

Fleischer, Håkan (2013), En elev-en dator: kunskapsbildningens kvalitet och villkor i den datoriserade skolan. Available at http://www.diva-portal.org/smash/record.jsf?pid=diva2:663330 [24 July 2014].

Flower, Linda and Hayes, John R. (1981), A Cognitive Process Theory of Writing. College Composition and Communication, 32(4): 365-387.

Francia, Guadalupe and Riis, Ulla (2013), Lärare, elever och spanska som modernt språk: Styrkor och svagheter - möjligheter och hot. Fortbildningsavdelningen för skolans internationalisering, Uppsala universitet.

Fredholm, Kent (2014), ‘I Prefer to Think for Myself’: Upper Secondary School Pupils’ Attitudes towards Computer-Based Spanish Grammar Exercises. The IAFOR Journal of Education, 2(1): 90-122.

Fredholm, Kent (submitted), El uso de traducción automática y otras estrategias de escritura digital en español como lengua extranjera.

Fredriksson, A. (2011), Marknaden och lärarna. Hur organiseringen av skolan påverkar lärares offentliga tjänstemannaskap. Rapport nr.: Göteborg Studies in Politics 123. Available at https://gupea.ub.gu.se/handle/2077/23913 [9 September 2012].

Garcia, Ignacio and Pena, Maria Isabel (2011), Machine Translation-Assisted Language Learning: Writing for Beginners. Computer Assisted Language Learning, 24(5): 471-487.

Gaspar, María del Pilar and Otañi, Isabel (2003), El papel de la gramática en la enseñanza de la escritura [The Role of Grammar in Writing Instruction]. Cultura y Educación, 15(1): 47-57.

Grönlund, Åke (2014), Att förändra skolan med teknik: Bortom ‘en dator per elev’. Örebro: Örebro universitet. Available at http://www.skl.se/MediaBinaryLoader.axd?MediaArchive_FileID=c4756896-6797-4b66-957f-f653dfe7e1f9&FileName=Bok+och+antologi+Unos+Uno+-+Att+f%c3%b6r%c3%a4ndra+med+teknik.pdf [21 March 2014].

Gunnarsson, Cecilia (2012), The Development of Complexity, Accuracy and Fluency in the Written Production of L2 French, 247-276, in: Housen, A., Kuiken, F., and Vedder, I. (Eds.), Dimensions of L2 Performance and Proficiency. Language Learning & Language Teaching. Amsterdam / Philadelphia: John Benjamins Publishing Company.

Hawisher, Gail E. and Selfe, Cynthia L. (1991), The Rhetoric of Technology and the Electronic Writing Class. College Composition and Communication, 42: 55-65.

Heift, Trude and Rimrott, Anne (2007), Learner Responses to Corrective Feedback for Spelling Errors in CALL. Elsevier: 196-213.

Hinkel, Eli (2002), Second Language Writers’ Text. Routledge. Available at http://dx.doi.org/10.4324/9781410602848 [14 November 2013].

Hyland, Ken (2013), Second Language Writing. Cambridge: Cambridge University Press.

Larson-Guenette, Julie (2013), ‘It’s Just Reflex Now’: German Language Learners’ Use of Online Resources. Die Unterrichtspraxis/Teaching German, 46(1): 62-74.

Leacock, Claudia, Chodorow, Martin, Gamon, Michael and Tetreault, Joel (2010), Automated Grammatical Error Detection for Language Learners. San Rafael, California: Morgan & Claypool.

Lennon, Paul (1990), Investigating Fluency in EFL: A Quantitative Approach. Language Learning, 40(3): 387-417.

Lodeiro, Julieta and Matti, Tomas (2014), Att tala eller inte tala spanska: En fördjupning av resultaten i spanska från Internationella språkstudien 2011. Stockholm: Skolverket.

López Rama, José and Luque Agulló, Gloria (2012), The Role of Grammar Teaching: From Communicative Approaches to the Common European Framework of Reference for Languages. Revista de Lingüística y Lenguas Aplicadas, 7(1). Available at http://ojs.cc.upv.es/index.php/rdlyla/article/view/1134 [22 May 2013].

Malvern, David D., Richards, Brian J., Chipere, Ngoni and Durán, Pilar (2004), Lexical Diversity and Language Development: Quantification and Assessment. Palgrave Macmillan.

Muncie, James (2002), Finding a Place for Grammar in EFL Composition Classes. ELT Journal, 56(2): 180-186.

Nassaji, Hossein and Fotos, Sandra (2004), Current Developments in Research on the Teaching of Grammar. Annual Review of Applied Linguistics, 2004(24): 126-145.

Niño, Ana (2009), Machine Translation in Foreign Language Learning: Language Learners’ and Tutors’ Perceptions of Its Advantages and Disadvantages. ReCALL, 21(2): 241-258.

O’Neill, Errol Marinus (2012), The Effect of Online Translators on L2 Writing in French. University of Illinois, Urbana-Champaign.

Polio, Charlene (2008), Research Methodology in Second Language Writing Research: The Case of Text-Based Studies, 91-115, in: Silva, T. and Matsuda, P. K. (Eds.), On Second Language Writing. New York; Abingdon, Oxon: Routledge.

Potter, Reva and Fuller, Dorothy (2008), My New Teaching Partner? Using the Grammar Checker in Writing Instruction. The English Journal, 98(1): 36-41.

Puranik, Cynthia S., Lombardino, Linda J. and Altmann, Lori JP (2008), Assessing the Microstructure of Written Language Using a Retelling Paradigm. American Journal of Speech-Language Pathology, 17(2): 107.

Rimrott, Anne and Heift, Trude (2005), Language Learners and Generic Spell Checkers in CALL. Calico Journal, 23(1): 17-48.

Roszak, Theodore (1994), The Cult of Information. A Neo-Luddite Treatise on High-Tech, Artificial Intelligence, and the True Art of Thinking. New York: Pantheon.

Sánchez, Miguel A. Martín (2008), El papel de la gramática en la enseñanza-aprendizaje de ELE. Ogigia. Revista electrónica de estudios hispánicos, 3: 29-41.

Scheuermann, Friedrich and Pedró, Francesc (Eds.) (2012), Assessing the Effects of ICT in Education: Indicators, Criteria and Benchmarks for International Comparisons. Available at http://190.11.224.74:8080/jspui/handle/123456789/1180 [12 July 2014].

Shapley, Kelly S., Sheehan, Daniel, Maloney, Catherine and Caranikas-Walker, Fanny (2010), Evaluating the Implementation Fidelity of Technology Immersion and Its Relationship with Student Achievement. The Journal of Technology, Learning, and Assessment, 9(4). Available at http://www.jtla.org [5 September 2012].

Silva, Tony (1993), Toward an Understanding of the Distinct Nature of L2 Writing: The ESL Research and Its Implications. TESOL Quarterly, 27(4): 657-677.

Silvernail, David L. and Gritter, Aaron K. (2007), Maine’s Middle School Laptop Program: Creating Better Writers. Gorham, ME: Maine Education Policy Research Institute. Available at http://www.sjchsdow.catholic.edu.au/documents/research_brief.pdf [27 May 2013].

Skolverket (2013a), Ämne - Moderna språk. Skolverket. Available at http://www.skolverket.se/forskola-och-skola/gymnasieutbildning/amnes-och-laroplaner/mod?subjectCode=MOD&lang=sv [16 April 2013].

Skolverket (2013b), Swedish Grades and How to Interpret Them. Available at http://www.skolverket.se/om-skolverket/andra-sprak-och-lattlast/in-english/2.7806/swedish-grades-and-how-to-interpret-them-1.208902 [5 March 2014].

Skolverket (2014a), Ämne - Moderna Språk (Gymnasieskolan). Skolverket. Available at http://www.skolverket.se/laroplaner-amnen-och-kurser/gymnasieutbildning/gymnasieskola/mod?tos=gy&subjectCode=MOD&lang=sv [12 March 2014].

Skolverket (2014b), Beskrivande data 2013 Förskola, skola och vuxenutbildning. Available at http://www.skolverket.se/om-skolverket/publikationer/visa-enskild-publikation?_xurl_=http%3A%2F%2Fwww5.skolverket.se%2Fwtpub%2Fws%2Fskolbok%2Fwpubext%2Ftrycksak%2FBlob%2Fpdf3211.pdf%3Fk%3D3211 [15 July 2014].

Steding, Sören (2009), Machine Translation in the German Classroom: Detection, Reaction, Prevention. Die Unterrichtspraxis/Teaching German, 42(2): 178-189.

Szmrecsányi, Benedikt (2004), On Operationalizing Syntactic Complexity. Jadt-04, 2: 1032-1039.

Tallvid, Martin and Hallerström, Helena (2009), En egen dator i skolarbetet - redskap för lärande? Utvärdering av projektet En-till-En i två grundskolor i Falkenbergs kommun. Delrapport 2. Göteborgs universitet, Falkenbergs kommun.

Vernon, Alex (2000), Computerized Grammar Checkers 2000: Capabilities, Limitations, and Pedagogical Possibilities. Computers and Composition, 17(3): 329-349.

Warschauer, Mark, Arada, Kathleen and Zheng, Binbin (2010), Laptops and Inspired Writing. Journal of Adolescent & Adult Literacy, 54(3): 221-223.

Wolfe-Quintero, Kate, Inagaki, Shunji and Kim, Hae-Young (1998), Second Language Development in Writing: Measures of Fluency, Accuracy, & Complexity. Honolulu, Hawai’i: Second Language Teaching & Curriculum Center, University of Hawai’i at Mānoa.

Yu, Guoxing (2009), Lexical Diversity in Writing and Speaking Task Performances. Applied Linguistics, 31(2): 236-259.

(2014), One Laptop per Child. Available at http://one.laptop.org/ [24 July 2014].

Websites Mentioned in the Article

https://translate.google.se/

http://www.lexikon24.nu/

www.screencast-o-matic.com